This is a real smart switch!

Have you ever tried asking Alexa to turn on the living room lights while surrounded by a swarm of screaming children? Difficult, eh? And late in the evening, would you ask Alexa to turn off the bathroom light that someone always forgets to switch off, at the risk of waking the children? That’s right: you have to get up from the sofa.

In these and many other situations, the most suitable and sustainable smart switch ever is a nice piece of cardboard! Don’t believe it? I’ll show you.

No tricks

A few days ago I asked myself how I could use the security cameras installed inside and outside my home to make it smarter.

Well! A silent Alexa replacement would be handy, I said to myself.

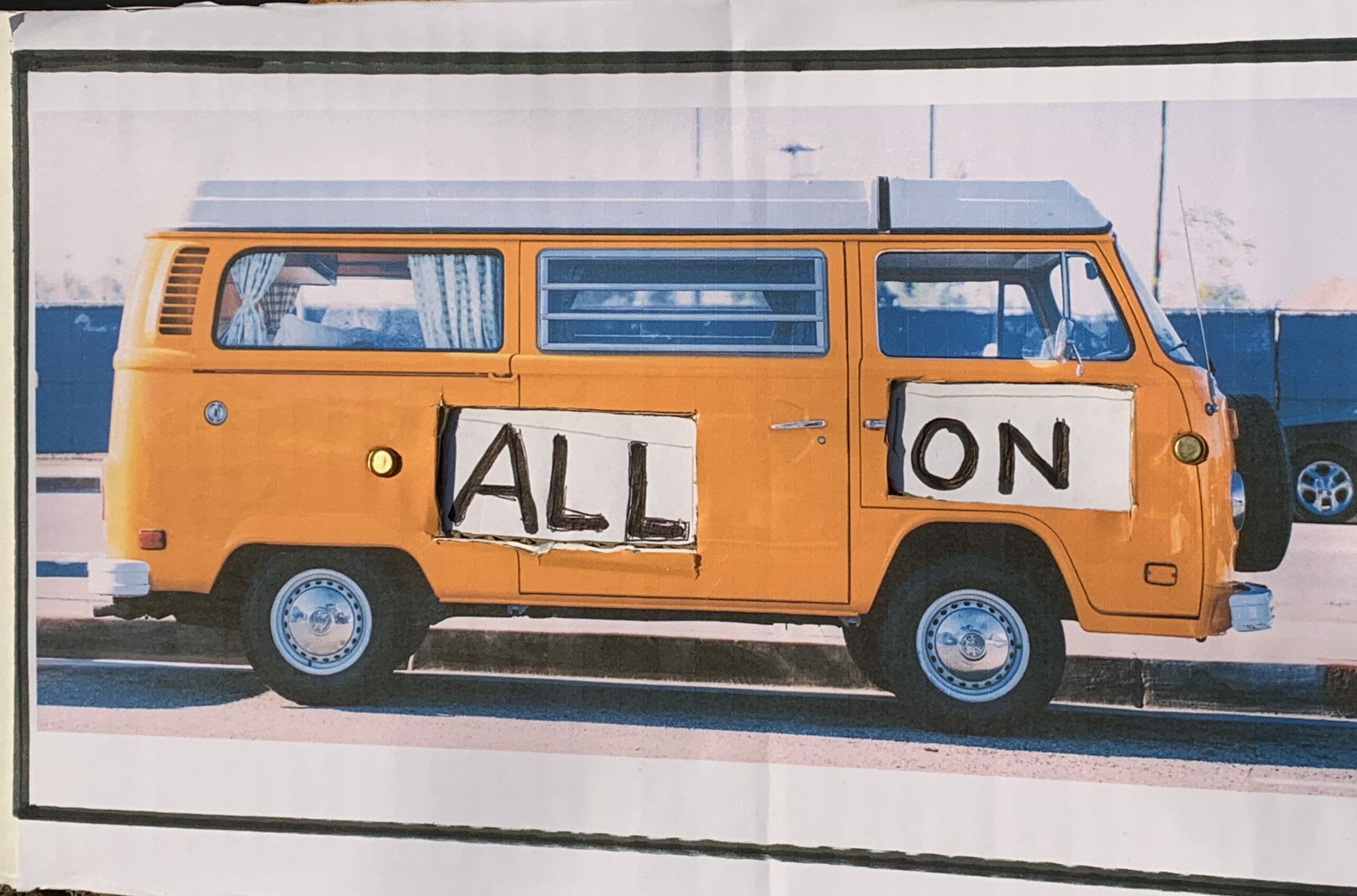

So I tried to find ways to use camera images to generate events, such as turning a light bulb on or off. The idea I came up with is this: identify an easily recognizable object and read the text placed above it. A sign, in short! A sign that can be used to give commands to our IoT devices.

Object detection

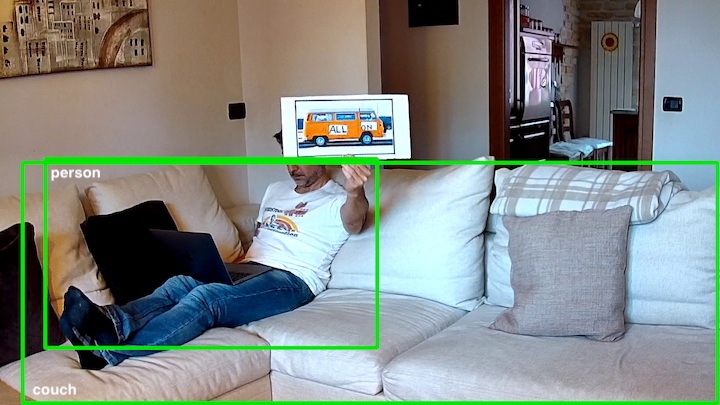

I used a Raspberry Pi 4 to analyze the video stream from my camera and recognize objects, thanks to the OpenCV (cv2) library, its DNN (Deep Neural Network) module and the MobileNet SSD v2 COCO model.
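To give an idea of what this step can look like, here is a minimal sketch and not the project's actual code. It assumes the TensorFlow export of the model loaded with `cv2.dnn.readNetFromTensorflow`; the file names are hypothetical, and the class ids (3 = car, 6 = bus, 8 = truck) are assumed from the usual COCO label map, so check them against your own model files.

```python
# Sketch only: file names and class ids below are assumptions.
MODEL_PB = "frozen_inference_graph.pb"        # hypothetical path to the weights
MODEL_PBTXT = "ssd_mobilenet_v2_coco.pbtxt"   # hypothetical path to the graph config
TRIGGER_CLASSES = {3: "car", 6: "bus", 8: "truck"}  # assumed COCO label-map ids


def filter_detections(rows, min_confidence=0.5, wanted=TRIGGER_CLASSES):
    """Keep rows [_, class_id, confidence, left, top, right, bottom] that match."""
    hits = []
    for row in rows:
        class_id, confidence = int(row[1]), float(row[2])
        if class_id in wanted and confidence >= min_confidence:
            box = tuple(float(v) for v in row[3:7])  # normalised 0..1 coordinates
            hits.append((wanted[class_id], confidence, box))
    return hits


def load_network(pb_path=MODEL_PB, pbtxt_path=MODEL_PBTXT):
    """Load the SSD network through OpenCV's DNN module."""
    import cv2  # imported lazily so filter_detections works without OpenCV

    return cv2.dnn.readNetFromTensorflow(pb_path, pbtxt_path)


def detect_triggers(frame, net, min_confidence=0.5):
    """Run the network on a BGR frame and return the trigger objects found."""
    import cv2

    blob = cv2.dnn.blobFromImage(frame, size=(300, 300), swapRB=True, crop=False)
    net.setInput(blob)
    detections = net.forward()  # expected shape: (1, 1, N, 7)
    return filter_detections(detections[0, 0], min_confidence)
```

On the Pi you would call `load_network()` once at startup and then `detect_triggers(frame, net)` for each frame you want to inspect. The 0.5 confidence threshold is just a starting point for tuning.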

Going through the classes of objects recognized by the model, a nice “bus” immediately caught my eye. Perfect! An object that is easy to recognize and unlikely to show up in my living room, which helps avoid false positives. The image I chose to decorate my sign was immediately recognized by the model as a bus. Great!

However, when I used the image in a different context, the result was not so good. The presence of other recognized objects (person and sofa) and the small size of my bus compared to the entire image compromised its correct recognition.

Finding contours

Do I give up? No way! Our sign is a nice rectangle. Why not detect the rectangles in the image, extract their contents and use them for object detection?

By analyzing the contours and looking for those with approximately four sides, boom! Found it!
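Here is a rough sketch of how the "approximately four sides" check can be done with OpenCV. It is an illustration under my own assumptions (Canny edges, a 2% polygon tolerance, a minimum area of 2000 pixels), not necessarily what the linked box recognition code does.

```python
def looks_like_sign(vertex_count, area, min_area=2000.0):
    """A contour is a candidate if it simplifies to 4 corners and is big enough."""
    return vertex_count == 4 and area >= min_area


def find_sign_candidates(image, min_area=2000.0):
    """Return the cropped regions of a BGR image enclosed by four-sided contours."""
    import cv2  # imported lazily so looks_like_sign works without OpenCV

    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    blurred = cv2.GaussianBlur(gray, (5, 5), 0)
    edges = cv2.Canny(blurred, 50, 150)  # thresholds are assumptions to tune

    # [-2] picks the contour list on both OpenCV 3 and OpenCV 4 return layouts.
    contours = cv2.findContours(edges, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]

    crops = []
    for contour in contours:
        perimeter = cv2.arcLength(contour, True)
        # Simplify the contour; a rectangle should collapse to 4 vertices.
        approx = cv2.approxPolyDP(contour, 0.02 * perimeter, True)
        if looks_like_sign(len(approx), cv2.contourArea(approx), min_area):
            x, y, w, h = cv2.boundingRect(approx)
            crops.append(image[y:y + h, x:x + w])
    return crops
```

Each crop returned by `find_sign_candidates` can then be passed to the object detection model on its own, so the bus fills most of the picture.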

Now I’m able to submit the image contained in the rectangle to the object detection model and, yes! It is correctly recognized as a bus.

Text recognition

I said to myself: our bus is the “activation image”, just as the word “Alexa” is the “activation word” when spoken near Echo devices. I just need to submit the image to a text recognition system to identify commands and act accordingly.

I chose a cloud solution, using Amazon Rekognition. When a bus (or truck or car) is identified in the image, the image is uploaded to an S3 bucket and run through Rekognition’s text detection. Results? Excellent.

Detected text:ALL ON Confidence: 98.95% Id: 0 Type:LINE
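The fields in that output (detected text, confidence, id, type) match what Rekognition's `DetectText` operation returns. A minimal sketch with `boto3` could look like the following; the 90% confidence threshold and the function names are my own assumptions, and AWS credentials are expected to be configured already.

```python
def extract_commands(response, min_confidence=90.0):
    """Return the text of every LINE detection above the confidence threshold."""
    return [
        detection["DetectedText"].strip().upper()
        for detection in response.get("TextDetections", [])
        if detection.get("Type") == "LINE"
        and detection.get("Confidence", 0.0) >= min_confidence
    ]


def read_sign(local_path, bucket, key):
    """Upload an image to S3 and ask Rekognition for the text it contains."""
    import boto3  # imported lazily so extract_commands works without boto3

    boto3.client("s3").upload_file(local_path, bucket, key)
    response = boto3.client("rekognition").detect_text(
        Image={"S3Object": {"Bucket": bucket, "Name": key}}
    )
    return extract_commands(response)
```

Keeping only `LINE` detections avoids handling the same text twice, since Rekognition also reports each word separately with type `WORD`.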

Finished!

We’re done! Now we just have to run the commands based on the text returned by Amazon Rekognition. In my case I limited myself to turning on a couple of LEDs connected directly to the Raspberry Pi 4, but the only limit is your imagination!
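As an illustration of this last step, here is a small sketch that maps the recognized text to LED states. The pin numbers are hypothetical, "ALL OFF" is an assumed counterpart to the "ALL ON" sign, and the actual GPIO call is passed in as a callback so the logic can be tried without any hardware.

```python
LED_PINS = (17, 27)  # hypothetical BCM pin numbers for the two LEDs
COMMANDS = {"ALL ON": True, "ALL OFF": False}  # "ALL OFF" is an assumption


def apply_command(text, set_led, pins=LED_PINS):
    """Switch every LED according to the command; return False if unknown."""
    state = COMMANDS.get(text.strip().upper())
    if state is None:
        return False  # not a command we know, ignore it
    for pin in pins:
        set_led(pin, state)
    return True
```

On the Pi, `set_led` could wrap a GPIO library, for example by creating one `gpiozero.LED` per pin and calling its `on()` or `off()` method depending on the state.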

Source code is here.

Behind the scenes

A few tips if you want to build the same project: the resolution and position of the cameras are very important, and tuning is required to obtain good results. On the Raspberry Pi 4, don’t use a very high image resolution: you need to find a compromise between the quality required by the text recognition system and the resources needed to process the images.
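One simple way to experiment with that compromise is to cap the frame width before processing. This is only a sketch: the 1280 pixel limit is an arbitrary starting value, not a figure from my setup.

```python
def scaled_size(width, height, max_width=1280):
    """Return (width, height) shrunk to fit max_width, keeping the aspect ratio."""
    if width <= max_width:
        return width, height
    scale = max_width / width
    return max_width, max(1, round(height * scale))


def downscale(frame, max_width=1280):
    """Resize a frame with OpenCV only when it is wider than max_width."""
    import cv2  # imported lazily so scaled_size works without OpenCV

    height, width = frame.shape[:2]
    new_size = scaled_size(width, height, max_width)
    if new_size == (width, height):
        return frame
    return cv2.resize(frame, new_size, interpolation=cv2.INTER_AREA)
```

Lower the limit until text recognition starts to fail, then step back up a little.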

To install OpenCV on the Raspberry Pi, please check this link. The bufferless video capture class is from this post. Box recognition is from this page.

Did we have fun? See you next time!