AWS Lambda offline development with Docker

I work on projects that are increasingly oriented towards the serverless paradigm and increasingly implemented on the AWS Lambda platform. Being able to develop an AWS Lambda function offline, comfortably in your favorite IDE and without uploading the code every time you want to test it, significantly speeds up development and increases efficiency.

AWS Lambda environment in Docker

That’s right! The solution that lets us develop AWS Lambda code offline is a Docker image that replicates the live AWS Lambda environment almost exactly. The images available on Docker Hub provide a sandbox in which the function runs with the same libraries, file structure and permissions, environment variables and execution context it will find in production. Fantastic!

A Lambda function is rarely “independent” of other resources: it often needs to read objects stored in an S3 bucket, queue messages on SQS or access a DynamoDB table. The interesting aspect of this solution is that you can develop and test your code offline while still interacting with real AWS services and resources, simply by providing a pair of AWS access keys in the environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.
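To make the credential passing concrete, here is a minimal sketch (not taken from the author's repository) that builds a docker run command for a lambci/lambda image. It relies on Docker's -e NAME form without a value, which copies the variable from the host environment, so the keys never appear on the command line. The function name build_docker_run_cmd, the mount of the current folder on /var/task and the handler name lambda_function.lambda_handler are illustrative assumptions.

```python
import os

# Variables named in the text; Docker copies their host values into the
# container when they are passed as "-e NAME" with no "=value".
FORWARDED_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def build_docker_run_cmd(handler, event_json="{}",
                         image="lambci/lambda:python3.6", task_dir="."):
    """Return the argv list for a one-off invocation in a lambci/lambda container.

    Hypothetical helper: the mount point and defaults are assumptions.
    """
    cmd = ["docker", "run", "--rm",
           # Mount the code read-only where the Lambda runtime looks for it.
           "-v", f"{os.path.abspath(task_dir)}:/var/task:ro"]
    for name in FORWARDED_VARS:
        cmd += ["-e", name]  # forward the host value, if set
    # Image, then handler, then the event payload as a single argument.
    cmd += [image, handler, event_json]
    return cmd
```

For example, subprocess.run(build_docker_run_cmd("lambda_function.lambda_handler"), check=True) would run the function once with an empty event, provided Docker is installed and the image can be pulled.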

The LambCI docker-lambda project is frequently updated and well documented: it includes several runtime environments, such as Python, which we will use in the following sections. The environment I used for development is available in this repository.

Example function

Suppose we are working on a simple Python function that processes SQS messages and uses a package that is not normally installed in the AWS Lambda Python environment. The example code is as follows.

First, the Logger object is instantiated: we use it to trace the SQS event. The message body is expected to contain addends, and their sum is reported in the logs. We also trace the version of the Pillow package, which is not installed by default in the AWS Lambda environment, to verify that the additional packages were installed successfully. Finally, the function returns a sample text message (“All the best for you”) at the end of its execution.
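The original listing is not reproduced in this text, so here is a minimal sketch consistent with the description above, not the author's actual code. The message body format (a JSON list of numbers such as "[1, 2, 3]") is a hypothetical assumption, and the Pillow import is guarded so the sketch also runs where Pillow is missing, which is exactly the situation the log line is meant to reveal.

```python
import json
import logging

# Pillow comes from requirements.txt in the article; the guard keeps this
# sketch importable even when the package is not installed.
try:
    import PIL
    PILLOW_VERSION = getattr(PIL, "__version__", "unknown")
except ImportError:
    PILLOW_VERSION = None

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def lambda_handler(event, context):
    # Trace the incoming SQS event.
    logger.info("Event: %s", json.dumps(event))

    for record in event.get("Records", []):
        # Hypothetical body format: a JSON list of addends, e.g. "[1, 2, 3]".
        addends = json.loads(record["body"])
        logger.info("Sum of %s = %s", addends, sum(addends))

    # None here means the additional package was not installed.
    logger.info("Pillow version: %s", PILLOW_VERSION)
    return "All the best for you"
```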

Now let’s see how to execute the Lambda function inside a Docker container.

Dockerfile and Docker-Compose

First, we need to take care of installing the additional Python packages, in our example Pillow. We create a new Docker image from a Dockerfile based on the lambci/lambda:python3.6 image and install in it all the additional packages listed in the requirements.txt file.
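The Dockerfile itself is not shown here. As an illustration of the install step only, the sketch below builds a pip command that installs requirements.txt into a plain folder with pip's --target option instead of site-packages. The folder name lib is an assumption chosen to match the /var/task/lib path used by the docker-compose service, and pip_install_cmd is a hypothetical helper. Running this step inside an image derived from the Lambda environment matters for packages with compiled extensions such as Pillow, because the binaries then match the Lambda platform.

```python
import subprocess
import sys


def pip_install_cmd(requirements="requirements.txt", target="lib"):
    """Argv that installs every package listed in `requirements` into `target`.

    "--target" writes the packages into an ordinary folder, which can later be
    added to PYTHONPATH or zipped into a deployment package.
    """
    return [sys.executable, "-m", "pip", "install",
            "-r", requirements, "--target", target]


def install_requirements(requirements="requirements.txt", target="lib"):
    # Raises CalledProcessError if pip fails.
    subprocess.run(pip_install_cmd(requirements, target), check=True)
```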

Finally, in a docker-compose.yml file we define a lambda service to be used for offline debugging. It maps the host directory src for the sources and sets PYTHONPATH so that the additional packages in /var/task/lib can be imported.
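Why does setting PYTHONPATH make the extra packages importable? Each PYTHONPATH entry is added to sys.path, the list of folders the interpreter searches on import. The sketch below imitates that effect in-process, using a throwaway folder and a hypothetical module vendored_demo as stand-ins for /var/task/lib and Pillow. One difference: PYTHONPATH entries come after the script directory, while sys.path.insert(0, ...) puts the folder first.

```python
import importlib
import os
import sys
import tempfile


def add_vendor_dir(path):
    """Roughly what PYTHONPATH=<path> does: make `path` searchable on import."""
    path = os.path.abspath(path)
    if path not in sys.path:
        sys.path.insert(0, path)
    importlib.invalidate_caches()  # let the import system notice new files
    return path


# Demonstration with a temporary "lib" folder holding a hypothetical module.
with tempfile.TemporaryDirectory() as lib_dir:
    with open(os.path.join(lib_dir, "vendored_demo.py"), "w") as f:
        f.write("VALUE = 42\n")
    add_vendor_dir(lib_dir)
    import vendored_demo
    print(vendored_demo.VALUE)  # 42
```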

As a first test, just start docker-compose to run our Lambda function, passing the handler.

docker-compose run lambda src.lambda_function.lambda_handler

Events

Our function expects an SQS event to process. How do we send it? First, we need a test event in JSON format, saved to a file (for example event.json). Then we pass its contents on the docker-compose command line.

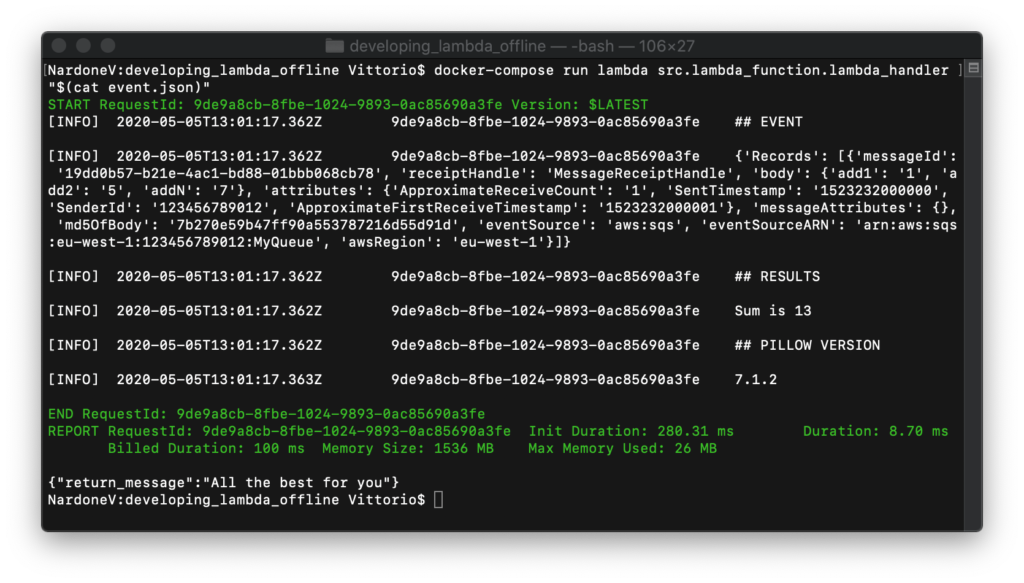

docker-compose run lambda src.lambda_function.lambda_handler "$(cat event.json)"

This is the result of execution.
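If you do not have a sample event at hand, the sketch below builds a minimal one in the shape Lambda uses for SQS (a "Records" list, one entry per message) and the argv equivalent of the command above. It is an illustration, not the author's event.json: the queue ARN, account ID and receipt handle are placeholders, a real SQS event carries more fields (for example md5OfBody and attributes such as ApproximateReceiveCount), and the body "[1, 2, 3]" is a hypothetical format. Passing the JSON as a single argv item avoids the shell quoting that "$(cat event.json)" relies on.

```python
import json
import uuid


def make_sqs_event(bodies, queue_arn="arn:aws:sqs:us-east-1:123456789012:my-queue"):
    """Minimal SQS-style event with one record per message body (placeholder values)."""
    return {"Records": [{
        "messageId": str(uuid.uuid4()),
        "receiptHandle": "placeholder-receipt-handle",
        "body": body,
        "attributes": {},
        "messageAttributes": {},
        "eventSource": "aws:sqs",
        "eventSourceARN": queue_arn,
        "awsRegion": queue_arn.split(":")[3],  # region field of the ARN
    } for body in bodies]}


def write_event(path, event):
    # Produces a file usable as event.json.
    with open(path, "w") as f:
        json.dump(event, f, indent=2)


def compose_run_cmd(event, handler="src.lambda_function.lambda_handler"):
    """Argv equivalent of: docker-compose run lambda <handler> "$(cat event.json)"."""
    return ["docker-compose", "run", "lambda", handler, json.dumps(event)]
```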

Perfect! Our function runs correctly and the result is what we expected. Starting the Docker container corresponds to an AWS Lambda cold start. Let’s see how to keep the container running so that we can call the function several times.

Keep running

Alternatively, you can start the container of our Lambda function and keep it running, so that you can make several consecutive calls quickly without waiting for the “cold start” each time. In this mode an API server is started, listening on port 9001 by default.

docker-compose run -e DOCKER_LAMBDA_STAY_OPEN=1 -p 9001:9001 lambda src.lambda_function.lambda_handler

We can then call our function using, for example, curl.

curl --data-binary "@event.json" http://localhost:9001/2015-03-31/functions/myfunction/invocations

The default handler of our Lambda function responds to this endpoint. The --data-binary parameter sends the contents of the JSON file, our sample SQS event, as the request body.
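The same call can be made from Python, for instance inside a test script, with only the standard library. This is a minimal sketch that mirrors the curl example (port 9001, path /2015-03-31/functions/myfunction/invocations); the helper names build_invoke_request and invoke are hypothetical, and the response is assumed to be JSON, as it is when the handler returns a string.

```python
import json
import urllib.request

# Endpoint from the curl example; "myfunction" is the name used there.
INVOKE_URL = "http://localhost:{port}/2015-03-31/functions/{name}/invocations"


def build_invoke_request(event, name="myfunction", port=9001):
    """POST request carrying `event` as the JSON payload."""
    return urllib.request.Request(
        INVOKE_URL.format(port=port, name=name),
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke(event, name="myfunction", port=9001, timeout=30):
    """Send the event to the stay-open container and decode the JSON response."""
    request = build_invoke_request(event, name, port)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the container running, invoke(json.load(open("event.json"))) should return the string produced by the handler, without paying the cold start again.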

Conclusions

I have collected in this GitHub repository the files needed to recreate the Docker environment I use for offline development and debugging of AWS Lambda functions in Python. For convenience, the most frequent operations are collected in a Makefile.

The make lambda-build command builds the deployment package of the function, including the additional packages.
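The Makefile recipe is not reproduced here. As a rough idea of what building such a package involves, the following sketch zips the sources and the vendored packages into a single archive. The layout is an assumption: files from src are stored under src/ so the handler src.lambda_function.lambda_handler still resolves, while the contents of lib go to the zip root, which Lambda puts on the import path. build_package is a hypothetical helper.

```python
import os
import zipfile


def build_package(zip_path, src_dir="src", lib_dir="lib"):
    """Zip function sources (under "src/") and vendored packages (at the root)."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for base, prefix in ((src_dir, "src"), (lib_dir, "")):
            if not os.path.isdir(base):
                continue  # e.g. no extra packages installed yet
            for root, dirs, files in os.walk(base):
                # Skip bytecode caches; Lambda compiles what it needs.
                dirs[:] = [d for d in dirs if d != "__pycache__"]
                for name in files:
                    full = os.path.join(root, name)
                    rel = os.path.relpath(full, base)
                    arcname = os.path.join(prefix, rel) if prefix else rel
                    zf.write(full, arcname)
    return zip_path
```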

Below is an example of deploying our Lambda function with CloudFormation.
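As a complementary sketch, not the author's template, a minimal CloudFormation template for this setup can be produced from Python as a dict and written out as JSON, which CloudFormation accepts. It assumes the deployment package is already uploaded to S3 and that an execution role with SQS read permissions exists; bucket, key, role and queue ARNs are placeholders supplied by the caller. The default runtime python3.6 only mirrors the Docker image used in the article, and AWS has since deprecated it for Lambda, so a supported Python runtime is needed today.

```python
import json


def lambda_template(bucket, key, role_arn, queue_arn,
                    handler="src.lambda_function.lambda_handler",
                    runtime="python3.6"):
    """Minimal template: the function plus an SQS trigger (placeholder inputs)."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "LambdaFunction": {
                "Type": "AWS::Lambda::Function",
                "Properties": {
                    "Handler": handler,
                    "Runtime": runtime,
                    "Role": role_arn,
                    "Code": {"S3Bucket": bucket, "S3Key": key},
                    "Timeout": 30,
                },
            },
            "SqsTrigger": {
                "Type": "AWS::Lambda::EventSourceMapping",
                "Properties": {
                    "EventSourceArn": queue_arn,
                    # Ref on a Lambda function returns its name.
                    "FunctionName": {"Ref": "LambdaFunction"},
                    "BatchSize": 10,
                },
            },
        },
    }
```

The result can be saved with json.dump to template.json and deployed with aws cloudformation deploy --template-file template.json --stack-name my-lambda-stack, where the stack name is a placeholder.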

Other commands available in the Makefile are:

## create Docker image with requirements
make docker-build

## run "src.lambda_function.lambda_handler" with docker-compose
## mapping "./tmp" and "./src" folders.
## "event.json" file is loaded and provided to function
make lambda-run

## run API server on port 9001 with "src.lambda_function.lambda_handler"
make lambda-stay-open

Had fun? Would you like to use this environment to build a CI pipeline that tests our function? That’s coming in the next post!

2 Comments


Hello Vittorio!

Thanks a lot for your article! There’s currently not much info on the topic on the internet, so each article is like gold!

I have some problems following your guide: I want to deploy the lambda function without using CloudFormation, but I get the following error: “Unable to import module ‘lambda_handler’: No module named ‘lambda_handler’”. I suppose there’s something wrong with how I set up the entry point for the lambda. I set:

`CMD override: lambda_handler.lambda_function`

and leave `ENTRYPOINT override` and `WORKDIR override` empty.

Do you know what the problem could be?

Hi! Thank you Tony.

I’m not sure about your error; it seems you are running the Lambda function in a Docker container with an incorrect setup.

To clarify: in my project, Docker is used to run the Lambda function locally and CloudFormation is used to deploy it to a real AWS environment.

Are you trying to deploy the function to AWS by running it in a container?

I suggest studying the Dockerfile, docker-compose.yml and Makefile in the project repository. They are a good starting point.

Feel free to contact me again. Bye!